Part 1/2

How this project started: curiosity first, use case second

This project started very simply: by sparring with ChatGPT about what kind of AI-powered system could realistically help me in my day-to-day work in B2B marketing and GTM. I wasn’t trying to build a perfect product. My primary goal was learning.

I wanted to understand, hands-on, what is actually possible with AI tools when you approach them from a marketer’s and strategist’s perspective — not from a software engineer’s point of view. The idea of building something super useful came second.

This framing mattered. It gave me permission to experiment, to build imperfectly, to break things, and to debug my way forward without aiming for a polished end result from day one.

Tools and building blocks used

Before getting into what I built, it’s useful to be transparent about how I built it.

This project was not about mastering a single tool. It was about understanding how different components can work together as a system, and learning where things break in practice.

The main tools and building blocks I used were:

- ChatGPT for ideation, reasoning, prompt design, and debugging support

- Make for orchestration, automation, and connecting different steps into workflows

- RSS feeds as the first structured signal source

- Airtable as structured storage for signals, enrichment, and iteration

- Large language models (LLMs) for extraction, summarization, and classification

- Prompt-based logic instead of rigid rules wherever possible

A large part of the learning came from debugging moments — when outputs didn’t make sense, classifications were inconsistent, or assumptions didn’t hold. Over time, I started to recognize patterns and fix issues independently, rather than relying on step-by-step guidance.

Defining the core problem: GTM signal detection at scale

The core problem I wanted to explore was GTM signal detection.

In B2B marketing and go-to-market roles, important signals are everywhere:

- Company announcements

- Hiring activity

- Funding rounds

- Product launches

- Market entries

The challenge is not access to information — it’s structure, relevance, and prioritization.

I defined “success” for this project as:

- Turning fragmented updates into structured signals

- Adding business context that makes signals understandable

- Making outputs usable for non-technical stakeholders

This definition guided all later decisions.

First build: signal ingestion as the foundation

I started with RSS feeds because they are structured, predictable, and quick to prototype with. This allowed me to focus on learning system design rather than fighting data chaos immediately.

Even at this stage, debugging became a core part of the work:

- Different feeds had different formats

- Some sources were consistently low quality

- Signal volume varied widely

Each issue forced small design decisions and taught me how fragile early systems can be without normalization.

What I built in this phase

- Signal ingestion layer

- Automated update collection

- Source normalization logic

This is what my first Make.com scenario looks like:

Turning raw signals into meaning: AI extraction and summarization

Raw inputs are not insights. A long article or announcement rarely communicates its relevance clearly without interpretation.

This is where AI became useful as an extraction layer. The goal was not creativity, but precision:

- Identify the core event

- Extract GTM-relevant facts

- Produce summaries optimized for scanning

This phase included a lot of trial and error. Early outputs were either too generic or overly detailed. Through repeated debugging and prompt refinement, I learned how small changes in instruction dramatically affect output quality.
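One common way to make extraction precise rather than creative is to ask for a fixed JSON shape and validate every reply before storing it. The sketch below assumes that approach. The prompt wording, the field names (`company`, `event_type`, `summary`, `gtm_relevance`) and the event categories are hypothetical, and the actual LLM call is left out so the code runs on its own.

```python
import json
from typing import Optional

# Hypothetical schema: the post does not publish its actual prompt or field names.
EXTRACTION_FIELDS = ("company", "event_type", "summary", "gtm_relevance")
EVENT_TYPES = ("funding", "hiring", "product_launch", "market_entry", "announcement", "other")
RELEVANCE = ("high", "medium", "low")


def build_extraction_prompt(title: str, text: str) -> str:
    """Ask for one JSON object with fixed keys so the reply can be parsed, not just read."""
    return (
        "You extract go-to-market signals from company news.\n"
        "Return ONLY a JSON object with these keys:\n"
        '  "company": the main company the news is about,\n'
        f'  "event_type": one of {", ".join(EVENT_TYPES)},\n'
        '  "summary": one sentence of at most 25 words stating the core event,\n'
        f'  "gtm_relevance": one of {", ".join(RELEVANCE)}.\n'
        "Do not add facts that are not in the text.\n\n"
        f"Title: {title}\nText: {text}"
    )


def parse_extraction(raw: str) -> Optional[dict]:
    """Validate a model reply; None means 'flag for review' rather than 'store'."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or any(key not in data for key in EXTRACTION_FIELDS):
        return None
    if data["gtm_relevance"] not in RELEVANCE:
        return None
    record = {key: data[key] for key in EXTRACTION_FIELDS}
    # Unknown event types are kept but bucketed, so one odd label doesn't lose the signal.
    if record["event_type"] not in EVENT_TYPES:
        record["event_type"] = "other"
    return record


def is_relevant(record: dict) -> bool:
    """Simple relevance filter: drop only what the model itself rated low."""
    return record["gtm_relevance"] != "low"
```

Returning `None` for a malformed reply lets a workflow route it to a review step instead of silently storing it. The `gtm_relevance` field also gives a simple hook for relevance filtering.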

What I built in this phase

- AI extraction layer

- Automated summaries

- Relevance filtering

Adding business context: enrichment layers

Once extraction worked reasonably well, it became obvious that knowing what happened is only half the story. Context is what turns updates into insights.

I added multiple enrichment layers to provide that context:

- Estimating company stage

- Inferring geographic focus

- Distinguishing B2B from B2C companies

This was one of the most educational parts of the project. Outputs were not always clean or consistent, which forced me to iterate on the logic, adjust constraints, and learn when to guide the model more explicitly.
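Guiding the model more explicitly can be as simple as giving it a closed list of labels plus an explicit "unknown" option, then coercing anything outside that list. Here is a minimal sketch, assuming the hypothetical label sets below for stage and business model and ISO 3166-1 alpha-2 codes for country. The post does not list the categories it actually used.

```python
# Hypothetical label sets: the post does not list the exact categories it used.
STAGES = ("seed", "early", "growth", "public", "unknown")
BUSINESS_MODELS = ("B2B", "B2C", "both", "unknown")

ENRICHMENT_PROMPT = """Classify the company in the news below.
Answer with exactly three lines:
stage: one of {stages}
country: ISO 3166-1 alpha-2 code of the company's main market, or unknown
model: one of {models}
If the text does not support an answer, write unknown instead of guessing.

News: {text}"""


def build_enrichment_prompt(text: str) -> str:
    return ENRICHMENT_PROMPT.format(
        stages=", ".join(STAGES), models=", ".join(BUSINESS_MODELS), text=text
    )


def parse_enrichment(reply: str) -> dict:
    """Read 'key: value' lines; anything outside the allowed labels becomes 'unknown'."""
    fields = {}
    for line in reply.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip().lower()] = value.strip().strip('"')
    stage = fields.get("stage", "").lower()
    country = fields.get("country", "").upper()
    models = {m.lower(): m for m in BUSINESS_MODELS}  # case-insensitive lookup
    return {
        "stage": stage if stage in STAGES else "unknown",
        # Shape check only: two letters, not a lookup against the real ISO list.
        "country": country if len(country) == 2 and country.isalpha() else "unknown",
        "business_model": models.get(fields.get("model", "").lower(), "unknown"),
    }
```

Mapping free-form answers such as "Series A" to `unknown` keeps the stored data consistent, at the cost of discarding some information a looser parser might recover.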

What I built in this phase

- Context enrichment

- Stage classification

- Country inference

- B2B classification layer

Structuring trust: confidence and quality signals

Not all AI outputs should be treated equally.

To make the system usable, I introduced confidence indicators that help assess how much trust to place in each signal. These were based on factors such as:

- Source reliability

- Signal clarity

- Consistency across extracted data
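The post names the confidence factors but not how they were combined. One simple option is a weighted average of per-factor scores between 0 and 1, mapped to a label. The weights, thresholds and the `SignalEvidence` type below are assumptions for illustration only.

```python
from dataclasses import dataclass

# Hypothetical weights and thresholds: the post names the factors, not the formula.
WEIGHTS = {"source_reliability": 0.40, "signal_clarity": 0.35, "consistency": 0.25}


@dataclass
class SignalEvidence:
    source_reliability: float  # 0..1, e.g. set per feed from its past quality
    signal_clarity: float      # 0..1, e.g. lower when the summary is vague or fields are missing
    consistency: float         # 0..1, e.g. share of enrichment fields that agree


def confidence(evidence: SignalEvidence) -> tuple[float, str]:
    """Weighted average of the factors, plus a label a non-technical reader can scan."""
    score = 0.0
    for name, weight in WEIGHTS.items():
        value = getattr(evidence, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
        score += weight * value
    label = "high" if score >= 0.75 else "medium" if score >= 0.5 else "low"
    return round(score, 2), label
```

The function only scores and labels a signal; it never drops one. Low-confidence signals stay visible, just marked as such, so the decision stays with the user.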

This step reinforced an important lesson: AI systems should help users make decisions, not silently make them on their behalf.

What I built in this phase

- Confidence scoring

- Quality indicators for prioritization

From experiments to a system: structured storage

At this point, structure became essential.

By storing outputs in a structured format, I could:

- Filter and compare signals

- Iterate on logic without starting over

- Lay the foundation for dashboards and analysis

This phase involved frequent breakage: schema changes, edge cases, and fixes I had to reason through independently. Each issue improved my understanding of how systems evolve beyond initial prototypes.
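Make's Airtable modules handle the API for you, but it can help to see what sits underneath. Here is a minimal sketch that builds (but does not send) requests for Airtable's Web API create-records endpoint, which accepts up to 10 records per call. The base ID, table name, token and column names are placeholders, not the project's real setup.

```python
import json
import urllib.parse
import urllib.request

AIRTABLE_API = "https://api.airtable.com/v0"
MAX_RECORDS_PER_REQUEST = 10  # limit of Airtable's create-records endpoint


def build_airtable_requests(
    base_id: str, table: str, token: str, rows: list[dict]
) -> list[urllib.request.Request]:
    """Build (but do not send) batched POST requests that create one record per row.

    Each row is a dict of Airtable column name -> value (placeholder columns).
    """
    url = f"{AIRTABLE_API}/{base_id}/{urllib.parse.quote(table)}"
    headers = {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}
    prepared = []
    for start in range(0, len(rows), MAX_RECORDS_PER_REQUEST):
        batch = rows[start:start + MAX_RECORDS_PER_REQUEST]
        body = {"records": [{"fields": row} for row in batch]}
        prepared.append(
            urllib.request.Request(
                url,
                data=json.dumps(body).encode("utf-8"),
                headers=headers,
                method="POST",
            )
        )
    return prepared
```

Each request could then be sent with `urllib.request.urlopen(req)`. Batching starts to matter as volume grows: 23 signals become three requests instead of 23.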

What I built in this phase

- Structured storage

- Reusable data model

- Foundations for analysis and visualization

What exists today

Putting everything together, the system now includes:

- Signal ingestion

- AI extraction

- Context enrichment

- Stage classification

- Country inference

- Confidence scoring

- B2B classification layer

- Structured storage

This is no longer just an automation experiment.

It is an AI-powered GTM intelligence engine — designed to surface meaningful signals, enriched with business context, and structured for reuse.

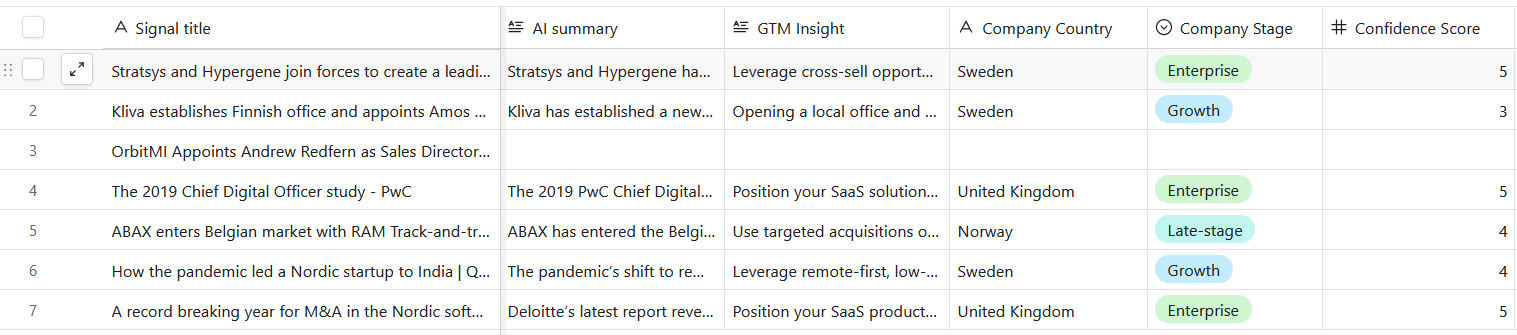

This is part of the Airtable view.

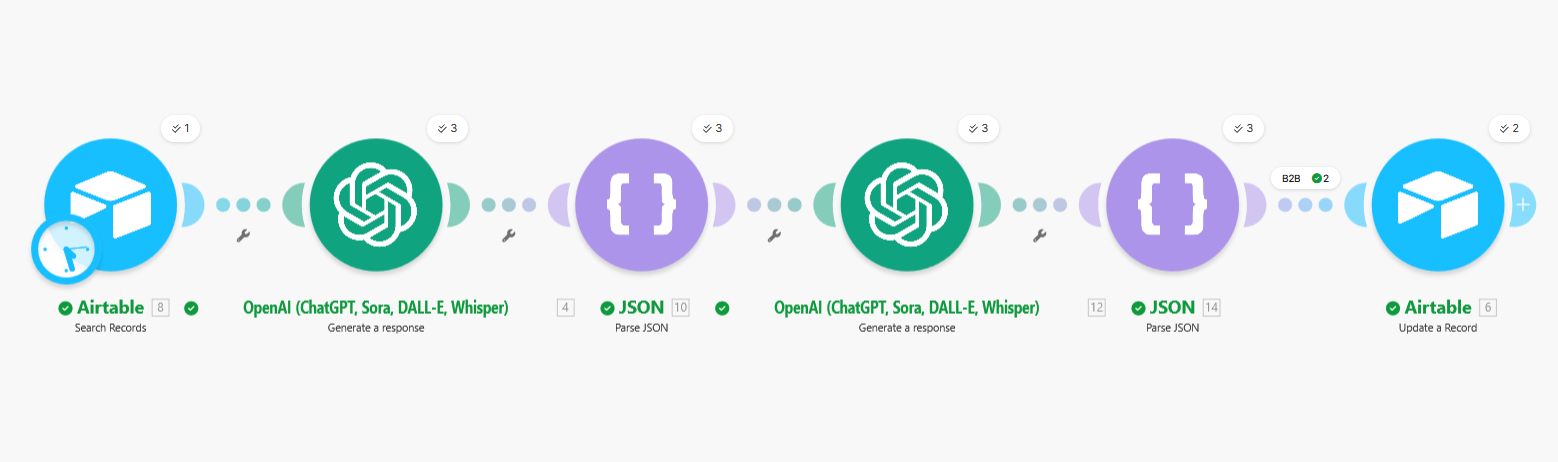

This is what my second Make.com scenario looks like:

What I learned along the way

This project reinforced several key lessons:

- Debugging is where real learning happens

- AI systems require iteration, not perfection

- Clear questions matter more than complex logic

- You don’t need to be an engineer to design intelligent systems — but you do need structured thinking

What’s next (part 2)

Part 2 of this project will focus on the next phase:

- Expanding data sources

- Improving usability

- Moving closer to a more concrete, user-facing solution

The next iteration will also include experimentation with Lovable and deeper exploration of how this kind of system could be applied in real GTM workflows.

In total, Part 1 took about 10 hours. Apart from ChatGPT, most of the tools were fairly new to me. I used the free versions of all tools except ChatGPT, where I bought the cheapest paid plan (€8/month), which was enough. I also bought OpenAI credits to use the API key. I didn't run large volumes for this test, to save energy and nature 🌱, and I could see it working very well with only a small amount of data.

I know this is just the beginning, but what a confidence boost this gave me! I can do this and much more 😍 TBH, it wasn't even that hard with ChatGPT helping me at every step. I already have so many ideas for what to do next ✨

This project started as a learning exercise. It turned into a working system — and, more importantly, a much deeper understanding of how AI can realistically support modern B2B marketing and GTM work.